The allure of quantum computers is, at its heart, quite simple: by leveraging counterintuitive quantum effects, they could perform computational feats utterly impossible for any classical computer. But reality is more complex: to date, most claims of quantum “advantage”—an achievement by a quantum computer that a regular machine can’t match—have struggled to show they truly exceed classical capabilities. And many of these claims involve contrived tasks of minimal practical use, fueling criticisms that quantum computing is at best overhyped and at worst on a road to nowhere.

Now, however, a team of researchers from JPMorganChase, quantum computing firm Quantinuum, Argonne National Laboratory, Oak Ridge National Laboratory and the University of Texas at Austin seems to have shown a genuine advantage that’s relevant to real-life issues of online security. The group’s results, published recently in Nature, build upon a previous certification protocol—a way to check that random numbers were generated fairly—developed by U.T. Austin computer scientist Scott Aaronson and his former postdoctoral researcher Shih-Han Hung.

Using a Quantinuum-developed quantum computer in tandem with classical, or traditional, supercomputers at Argonne and Oak Ridge, the team demonstrated a technique that achieves what is called certified randomness. This method generates random numbers from a quantum computer that are then verified using classical supercomputers, allowing the now-certified random numbers to be safely used as passkeys for encrypted communications. The technique, the team notes, outputs more randomness than it takes in—a task unachievable by classical computation.

Using the pictured quantum computer model developed by the computing firm Quantinuum, a team of physicists and engineers demonstrated a technique that achieves what is called certified randomness.

“Theoretically, I think it’s interesting because you need to put together a lot of technical tools in order to make the theoretical analysis fly,” says Hung, now an assistant professor of electrical engineering at National Taiwan University. “Random-number generation is a central task for modern cryptography and algorithms. You want the encryption to be secure and for the [passkey] to be truly random.”

When it comes to Internet security, randomness is a crucial defense: a shield against malicious adversaries who seek to spy on secret communications and manipulate or steal sensitive data. The two-factor authentication routinely used to protect personal online accounts is a good example: A user logs in to a system with a password but then also uses a secure device to receive a randomly generated code from an external source. Because that code is random, adversaries can’t predict it; by entering it, the user verifies their identity and is granted access.
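As a minimal sketch of the kind of one-time code described above, the snippet below assumes a server that draws a numeric code from Python’s `secrets` module, which uses the operating system’s cryptographic random source. The function name `one_time_code` is hypothetical. Many authenticator apps instead derive codes from a shared secret and the current time (the TOTP standard). Either way, the user simply has to trust that the source is unpredictable; nothing here certifies it.

```python
import secrets

def one_time_code(digits: int = 6) -> str:
    """Hypothetical helper: a zero-padded numeric code from the OS's cryptographic RNG."""
    # secrets.randbelow(k) returns a uniformly random integer in [0, k).
    return f"{secrets.randbelow(10 ** digits):0{digits}d}"

print(one_time_code())  # a different six-digit string on each call
```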

“Random numbers are used everywhere in our digital lives,” says Henry Yuen, a computer scientist at Columbia University, who was uninvolved with the study. “We use them to secure our digital communications, run randomized controlled trials for medical testing, power computer simulations of cars and airplanes—it’s important to ensure that the numbers used for these are indeed randomly generated.”

In some cryptographic applications, however, it’s not enough to just generate random numbers: one must also know for certain that they are the outcome of an unbiased process. “It’s important to be able to prove the randomness to a skeptic who does not trust the device producing the randomness,” says Bill Fefferman, a computer scientist at the University of Chicago, who was not involved in the new work. Implementing such protocols to check each and every outcome would be “impossible classically,” Fefferman says, but it becomes possible with the greater computational power of quantum devices.

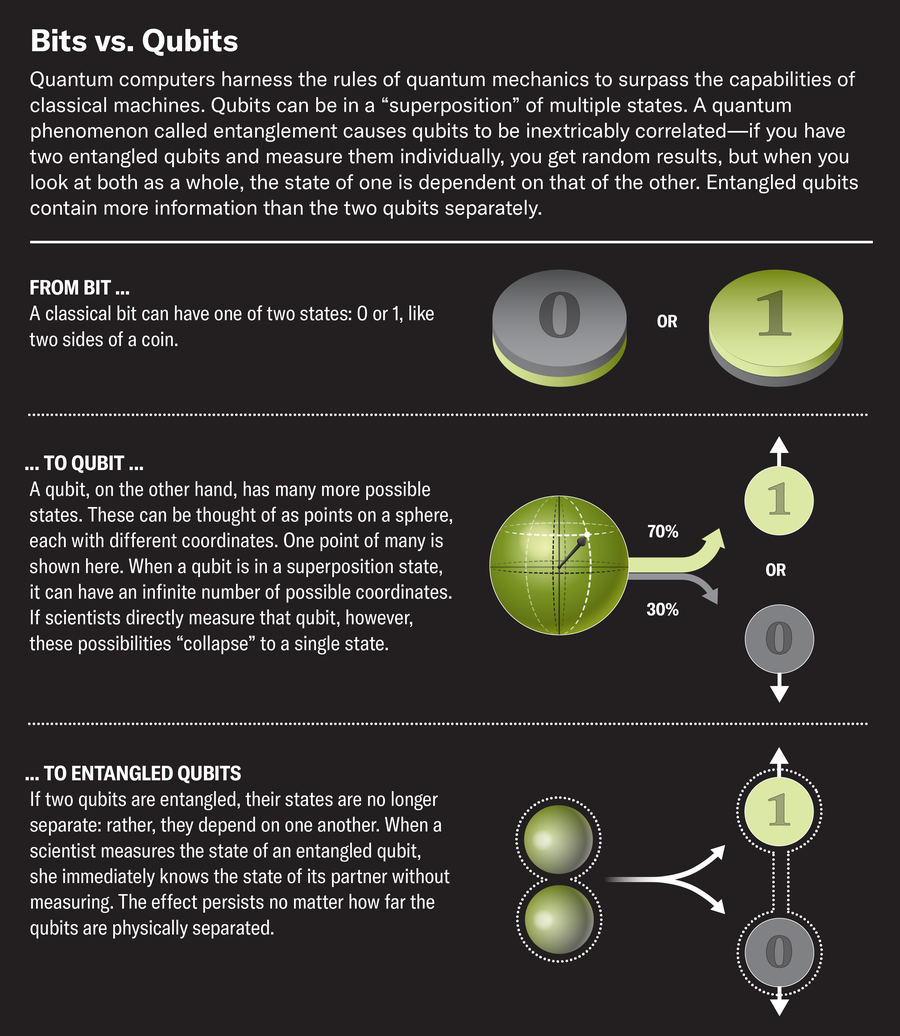

“Quantum computers and quantum technologies offer the only way to reliably generate and test randomness,” Yuen says. Unlike classical computers, which process information as binary “bits” that are each either 0 or 1, quantum computers operate on qubits, which can exist in a superposition: a blend of 0 and 1 with a continuum of possible weightings. Describing the state of a group of qubits takes a list of numbers that doubles in length with every qubit added, which is why, for certain problems, quantum computers can tackle tasks that classical machines struggle even to simulate.
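The doubling can be made concrete with some back-of-the-envelope arithmetic. This sketch assumes brute-force “state-vector” simulation, which stores one complex number (amplitude) for each of the 2ⁿ possible bit strings, at 16 bytes per double-precision complex number. Practical simulators often use cleverer methods, such as tensor networks, so treat this as an upper-bound illustration rather than the real cost of checking a 56-qubit machine. The function names are hypothetical.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to store all 2**n amplitudes (16 bytes = one double-precision complex)."""
    return (2 ** n_qubits) * bytes_per_amplitude

def human(nbytes: float) -> str:
    """Format a byte count with binary units (KiB = 1,024 bytes, and so on)."""
    for unit in ("B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"):
        if nbytes < 1024:
            return f"{nbytes:g} {unit}"
        nbytes /= 1024
    return f"{nbytes:g} ZiB"

for n in (10, 30, 56):
    print(f"{n} qubits: {2 ** n:,} amplitudes, {human(statevector_bytes(n))}")
# Last line printed: 56 qubits: 72,057,594,037,927,936 amplitudes, 1 EiB
```

Each extra qubit doubles the memory, so going from 30 to 56 qubits multiplies it by roughly 67 million.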

The quantum computer involved in the latest demonstration uses 56 such qubits to run the protocol developed by Aaronson and Hung. The gist of the procedure is relatively straightforward. First, the quantum computer is given a series of randomly chosen quantum circuits, each of which it must run and measure to produce a random output, a process called random circuit sampling. For a small enough quantum computer, usually one with fewer than 75 qubits, classical computers can still check these outputs against the ideal behavior of the circuits to ascertain that the results couldn’t have been generated classically, explains Christopher Monroe, a quantum computing expert at Duke University, who was not involved in the study.
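To give a feel for random circuit sampling, here is a toy version on four simulated qubits. It is a sketch under stated assumptions: the gate set (random single-qubit rotations followed by alternating layers of controlled-Z gates) and the helper names are hypothetical choices for illustration, not the circuits Quantinuum ran. The key point it shows is that a classical simulator can compute the ideal probability of every possible output; in the real protocol the samples come from quantum hardware, and here `rng.choices` stands in for the device.

```python
import cmath
import math
import random

def u3(theta, phi, lam):
    """General single-qubit unitary as a 2x2 nested list."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -cmath.exp(1j * lam) * s],
            [cmath.exp(1j * phi) * s, cmath.exp(1j * (phi + lam)) * c]]

def apply_1q(state, gate, q):
    """Apply a 2x2 gate to qubit q (qubit q = bit q of the basis-state index)."""
    bit = 1 << q
    for i in range(len(state)):
        if not (i & bit):  # visit each (bit=0, bit=1) amplitude pair once
            a0, a1 = state[i], state[i | bit]
            state[i] = gate[0][0] * a0 + gate[0][1] * a1
            state[i | bit] = gate[1][0] * a0 + gate[1][1] * a1

def apply_cz(state, q1, q2):
    """Controlled-Z: flip the sign of amplitudes where both qubits are 1."""
    mask = (1 << q1) | (1 << q2)
    for i in range(len(state)):
        if (i & mask) == mask:
            state[i] = -state[i]

def random_circuit_probs(n, depth, rng):
    """Run a random circuit on |00...0> and return the ideal output probabilities."""
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    for layer in range(depth):
        for q in range(n):  # random single-qubit rotations on every qubit
            angles = [rng.uniform(0, 2 * math.pi) for _ in range(3)]
            apply_1q(state, u3(*angles), q)
        for q in range(layer % 2, n - 1, 2):  # alternating "brickwork" of CZ gates
            apply_cz(state, q, q + 1)
    return [abs(a) ** 2 for a in state]  # Born rule: probability = |amplitude|^2

rng = random.Random(2024)
probs = random_circuit_probs(n=4, depth=8, rng=rng)
samples = rng.choices(range(len(probs)), weights=probs, k=5)  # stand-in for hardware shots
print([format(s, "04b") for s in samples])
```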

Verification is the next step in the protocol, but it comes with an added constraint: time. The quantum computer must return its outputs faster than they could be mimicked (or “spoofed”) by any known classical computing method. In the team’s demonstration, the Quantinuum system took a couple of seconds to produce each output. Supercomputers at the two national laboratories then verified these outputs, devoting a total of 18 hours of computing time to certify more than 70,000 random bits.
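The timing requirement can be sketched as a client that accepts a reply only if it arrives before a deadline. Everything here is hypothetical: the article does not give the team’s actual cutoff or challenge format, so `timed_round`, the string standing in for a circuit description, the two mock servers and the deadline values are illustrative assumptions.

```python
import time
from typing import Callable, Optional

def timed_round(server: Callable[[str], str], challenge: str,
                deadline_s: float) -> Optional[str]:
    """Send one challenge; keep the reply only if it arrived before the deadline."""
    start = time.monotonic()
    reply = server(challenge)
    elapsed = time.monotonic() - start
    # A slow reply might have been produced by classical simulation,
    # so this sketch simply discards it.
    return reply if elapsed <= deadline_s else None

def fast_server(challenge: str) -> str:
    return "0110"  # mock: answers immediately

def slow_server(challenge: str) -> str:
    time.sleep(0.2)  # mock: takes too long, as a spoofer might
    return "0110"

print(timed_round(fast_server, "circuit-1", deadline_s=1.0))   # 0110
print(timed_round(slow_server, "circuit-2", deadline_s=0.05))  # None
```

A fast reply alone proves nothing; in the protocol it is paired with a statistical check of the outputs, described next.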

These bits were certified using a test that assigns the outputs a cross-entropy benchmarking (XEB) score, which measures how closely they match the probability distribution the ideal quantum circuit would produce. A high XEB score combined with a short response time means the outputs are very unlikely to have been influenced by interference from untrusted sources. According to Aaronson, spoofing the system classically would require the continuous work of at least four comparable supercomputers.
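A common form of the score is the linear XEB: multiply the number of possible outputs by the average ideal probability of the observed samples, then subtract 1. This sketch assumes that linear variant (the article does not say exactly which formula the team used) and uses a made-up two-qubit distribution in place of probabilities computed by a supercomputer. Samples that favor outputs the ideal circuit makes likely score above 0; samples that look uniformly random average out to about 0.

```python
from typing import Sequence

def linear_xeb(ideal_probs: Sequence[float], samples: Sequence[int]) -> float:
    """Linear XEB: 2**n * mean(ideal probability of each observed sample) - 1."""
    dim = len(ideal_probs)  # dim = 2**n possible bit strings for n qubits
    mean_p = sum(ideal_probs[s] for s in samples) / len(samples)
    return dim * mean_p - 1

# Toy 2-qubit "ideal" distribution (made up for illustration).
p = [0.5, 0.25, 0.125, 0.125]
print(linear_xeb(p, [0, 0, 1, 0]))  # 0.75: samples skewed toward likely outputs
print(linear_xeb(p, [0, 1, 2, 3]))  # 0.0: one of each, like uniform guessing
```

The expensive part in practice is computing `ideal_probs` for each 56-qubit circuit, which is why the verification step needs supercomputers.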

“The outcome of the [certified randomness test] is governed by quantum-mechanical randomness—it’s not uniformly random,” Aaronson says. For example, in the case of Quantinuum’s 56-qubit computer, perhaps only 53 of the 56 bits in an output carry substantial entropy, or randomness, and that would be just fine. “And, in fact, that it’s not uniform is very important; it’s the deviations from uniformity that allow us to test that in the first place that yes, these samples are good. They really did come from this quantum circuit.”
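Why a non-uniform output carries fewer bits of randomness than its length can be seen with two standard entropy measures. The sketch below compares a uniform distribution with a made-up skewed one over two-bit outputs; neither comes from the experiment. Shannon entropy gives the average unpredictability, while min-entropy, set by the single most likely outcome, is the worst-case measure cryptographers usually rely on. Certified-randomness schemes generally pass raw samples through a randomness extractor to distill nearly uniform bits; that step is not shown here.

```python
import math

def shannon_entropy(probs):
    """Average bits of unpredictability per sample."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def min_entropy(probs):
    """Worst-case bits of unpredictability: set by the most likely outcome."""
    return -math.log2(max(probs))

uniform = [0.25] * 4                # every 2-bit string equally likely
skewed = [0.5, 0.25, 0.125, 0.125]  # made-up non-uniform distribution
for name, dist in (("uniform", uniform), ("skewed", skewed)):
    print(name, shannon_entropy(dist), min_entropy(dist))
# uniform 2.0 2.0
# skewed 1.75 1.0
```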

But the fact that these measurements must be additionally verified with classical computers puts “important limits on the scalability and utility of this protocol,” Fefferman notes. Somewhat ironically, in order to prove that a quantum computer has performed some task correctly, classical supercomputers need to be brought in to pick apart its work. This is an inherent issue for most of the current generation of experiments seeking to prove quantum advantage, he says.

Aaronson is also aware of this limitation. “For exactly the same reason why we believe that these experiments are very hard to spoof using a classical computer, you’re playing this very delicate game where you need to be, like, just at the limit of what a classical computer can do,” Aaronson says.

That said, this is still an impressive first step, Fefferman says, and the protocol could be useful in cases such as public lotteries or jury selection, where unbiased fairness is key. Simply producing random numbers is not the challenge, Aaronson notes: “If you want random numbers, that’s trivial—just take a Geiger counter and put it next to some radioactive material.” The difficulty lies in proving to others that the numbers are random. “Using classical chaos can be fine if you trust the setup, but doesn’t provide certification against a dishonest server who just ignores the chaotic system and feeds you the output of a pseudorandom generator instead,” Aaronson adds in a reply to a comment on his blog post about the protocol.
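Aaronson’s dishonest server is easy to imitate. In this hypothetical sketch, `dishonest_server` returns bits from Python’s seeded `random.Random` generator (a Mersenne Twister, which is not cryptographically secure and is used here only for illustration). The output may look random at a glance, yet anyone who knows the seed can reproduce it exactly. This is the kind of substitution that the protocol’s timing and XEB checks are meant to rule out.

```python
import random

def dishonest_server(seed: int, n_bits: int) -> str:
    """Hypothetical server that returns pseudorandom bits from a fixed seed."""
    rng = random.Random(seed)
    return "".join(str(rng.getrandbits(1)) for _ in range(n_bits))

out = dishonest_server(seed=42, n_bits=32)
print(out)                              # looks like a random bit string
print(out == dishonest_server(42, 32))  # True: the seed fully determines the output
```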

Whether the protocol will have true practical value depends on subsequent research, as is the case for many “quantum advantage” experiments. “The hype in the field is just insane right now,” Monroe says. “But there’s something behind it, I’m convinced. Maybe not today, but I think in the long run, we’re going to see these things.”

Regardless, the new work is a formidable advance in quantum hardware, Yuen says. “A few years ago we were thrilled to have a handful of high-quality qubits in a lab. Now Quantinuum has made a quantum processor with 56 qubits.”

“Quantum advantage is not like landing on the moon—it’s a negative statement,” Aaronson says. “It’s a statement [claiming that] no one can do this using a classical computer. Then classical computing gets to fight back…. The classical hardware keeps improving, and people keep discovering new classical algorithms.”

In that sense, quantum advantage may be “a moving target” of sorts, Aaronson says. “We expect that, ultimately, for some problems, this war will be won by the quantum side. But if you want to win the war, you have to do problems where the quantum advantage is a little bit iffier, where it’s a little bit more vulnerable.”